Working with Unity3D under Diana Ford, we created a VR Director Toolkit that helps creators track and maintain the gaze of their viewers in virtual reality. The culmination of our team's work was presented at the Unity booth at SIGGRAPH 2016.

VR gives users almost unlimited freedom in where they look, so tooling is necessary to make sure their eyes are focused on what the director wants them to see. Forcing the user to look a particular way felt unnatural, so the best course of action was to develop smart methods that grab their attention and focus it naturally.

Part of my research revolved around choosing the most natural method to focus the player's gaze. We developed many methods for doing so, and needed a way to learn which ones worked best in which situations.

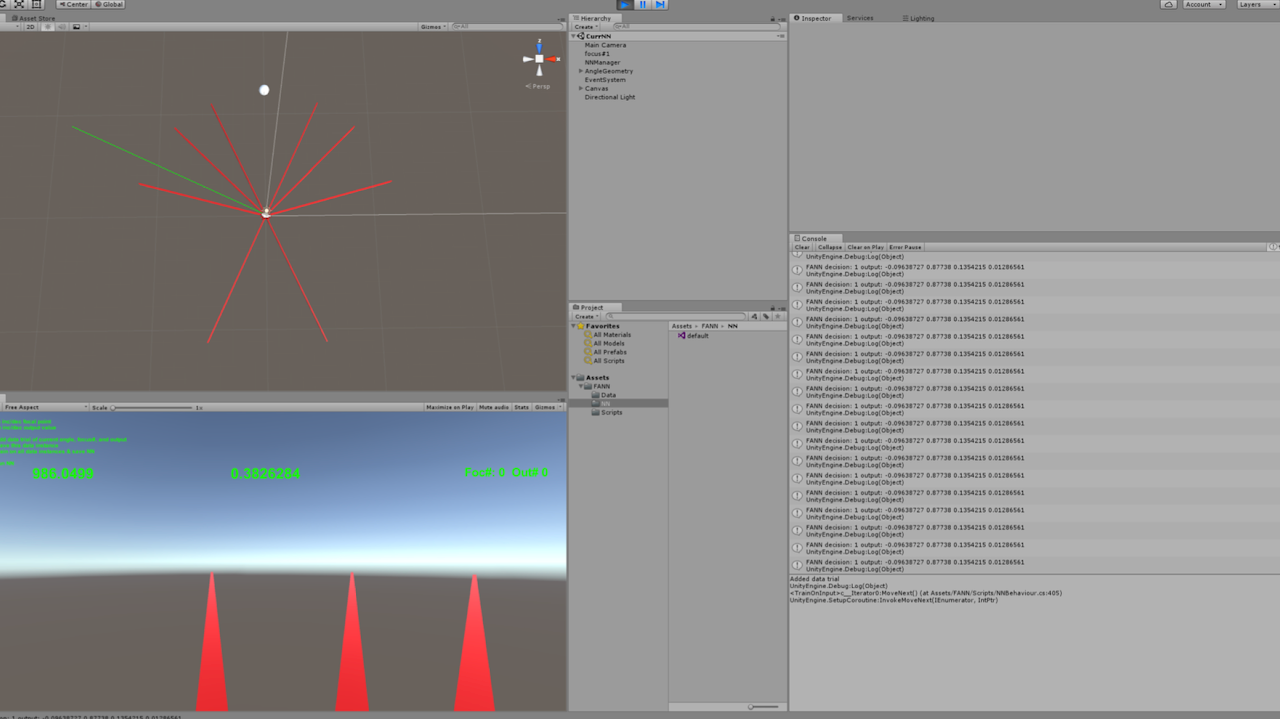

I implemented a C++ neural network that took as input the angle of the user's gaze along with the time within the experience.
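As a rough illustration of the kind of input described here, the sketch below turns a head-forward direction and a timestamp into yaw and pitch angles, then scales them into a small feature vector. This is not the toolkit's code: `Vec3`, `GazeSample`, `makeGazeSample`, `toFeatures`, the axis convention (+z forward, +x right, +y up), and the normalization are all assumptions made for the example.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical types for illustration; not taken from the original toolkit.
struct Vec3 { double x, y, z; };

struct GazeSample {
    double timeSec;   // seconds since the experience started
    double yawDeg;    // horizontal angle: 0 = straight ahead (+z), positive = right (+x)
    double pitchDeg;  // vertical angle: positive = up (+y)
};

// Converts a forward direction (non-zero, need not be normalized)
// into yaw/pitch angles and pairs them with a timestamp.
GazeSample makeGazeSample(double timeSec, const Vec3& forward) {
    const double kRadToDeg = 180.0 / 3.14159265358979323846;
    const double horizontal =
        std::sqrt(forward.x * forward.x + forward.z * forward.z);
    const double yaw = std::atan2(forward.x, forward.z) * kRadToDeg;
    const double pitch = std::atan2(forward.y, horizontal) * kRadToDeg;
    return {timeSec, yaw, pitch};
}

// Flattens a sample into values of roughly unit scale, a common
// preprocessing step before feeding data to a network (assumed here).
std::vector<double> toFeatures(const GazeSample& s, double experienceLengthSec) {
    assert(experienceLengthSec > 0.0);
    return {s.timeSec / experienceLengthSec, s.yawDeg / 180.0, s.pitchDeg / 90.0};
}
```

In a Unity project the forward vector would come from the headset camera's transform. Here it is passed in directly so the sketch stays independent of any engine.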

We trained the model on data from over 100 people to determine which methods worked best at which points in the experience, depending on which direction the user was looking at the time.

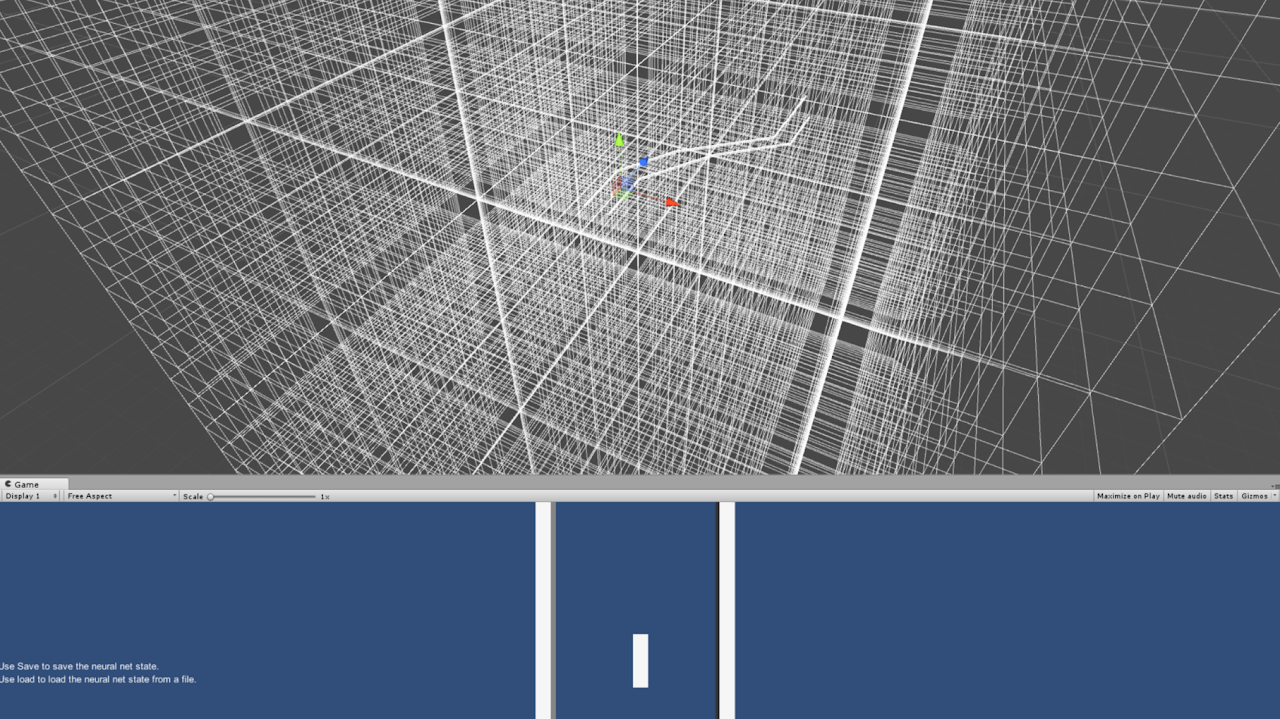

I also implemented a voxelization system, whose data was also fed into the learning model. The voxelization system covered the entire cinematic experience and kept track of which objects the user's gaze collided with.

This gave a more accurate picture of what the user was looking at and when.
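To make the idea of gaze-versus-voxel collisions concrete, here is a minimal sketch of one way it could work: a dense grid stores an object ID per voxel, and a gaze ray is sampled at fixed half-voxel steps until it lands in an occupied cell. The original system's data layout and traversal are not described, so everything here (`VoxelGrid`, `GazeHit`, `recordGaze`, `kEmptyVoxel`, the fixed-step marching) is a hypothetical stand-in, not the actual implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// All names below are hypothetical and exist only for this example.
struct Vec3 { double x, y, z; };

constexpr int kEmptyVoxel = -1;  // marks a voxel with no object in it

class VoxelGrid {
public:
    VoxelGrid(int nx, int ny, int nz, double voxelSize)
        : nx_(nx), ny_(ny), nz_(nz), voxelSize_(voxelSize),
          cells_(static_cast<std::size_t>(nx) * ny * nz, kEmptyVoxel) {
        assert(nx > 0 && ny > 0 && nz > 0 && voxelSize > 0.0);
    }

    // Tags the voxel containing p with objectId; out-of-bounds points are ignored.
    void mark(const Vec3& p, int objectId) {
        const long i = index(p);
        if (i >= 0) cells_[static_cast<std::size_t>(i)] = objectId;
    }

    // Samples the ray every half voxel and returns the first object ID found,
    // or kEmptyVoxel if nothing is hit within maxDist.
    int firstHit(const Vec3& origin, const Vec3& dir, double maxDist) const {
        const double len = std::sqrt(dir.x * dir.x + dir.y * dir.y + dir.z * dir.z);
        if (len == 0.0) return kEmptyVoxel;
        const double step = voxelSize_ * 0.5;
        for (double t = 0.0; t <= maxDist; t += step) {
            const Vec3 p{origin.x + dir.x / len * t,
                         origin.y + dir.y / len * t,
                         origin.z + dir.z / len * t};
            const long i = index(p);
            if (i >= 0 && cells_[static_cast<std::size_t>(i)] != kEmptyVoxel)
                return cells_[static_cast<std::size_t>(i)];
        }
        return kEmptyVoxel;
    }

private:
    // Maps a point to a flat cell index, or -1 if it lies outside the grid.
    long index(const Vec3& p) const {
        const int ix = static_cast<int>(std::floor(p.x / voxelSize_));
        const int iy = static_cast<int>(std::floor(p.y / voxelSize_));
        const int iz = static_cast<int>(std::floor(p.z / voxelSize_));
        if (ix < 0 || iy < 0 || iz < 0 || ix >= nx_ || iy >= ny_ || iz >= nz_)
            return -1;
        return (static_cast<long>(iz) * ny_ + iy) * nx_ + ix;
    }

    int nx_, ny_, nz_;
    double voxelSize_;
    std::vector<int> cells_;
};

struct GazeHit {
    double timeSec;  // when the gaze landed on the object
    int objectId;    // which object it landed on
};

// Appends a (time, object) record whenever the gaze ray hits something.
void recordGaze(const VoxelGrid& grid, std::vector<GazeHit>& log, double timeSec,
                const Vec3& origin, const Vec3& dir, double maxDist = 100.0) {
    const int id = grid.firstHit(origin, dir, maxDist);
    if (id != kEmptyVoxel) log.push_back({timeSec, id});
}
```

Fixed-step sampling keeps the example short but can skip past the corner of a voxel. An exact grid traversal (a 3D DDA) would avoid that at the cost of more code.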